
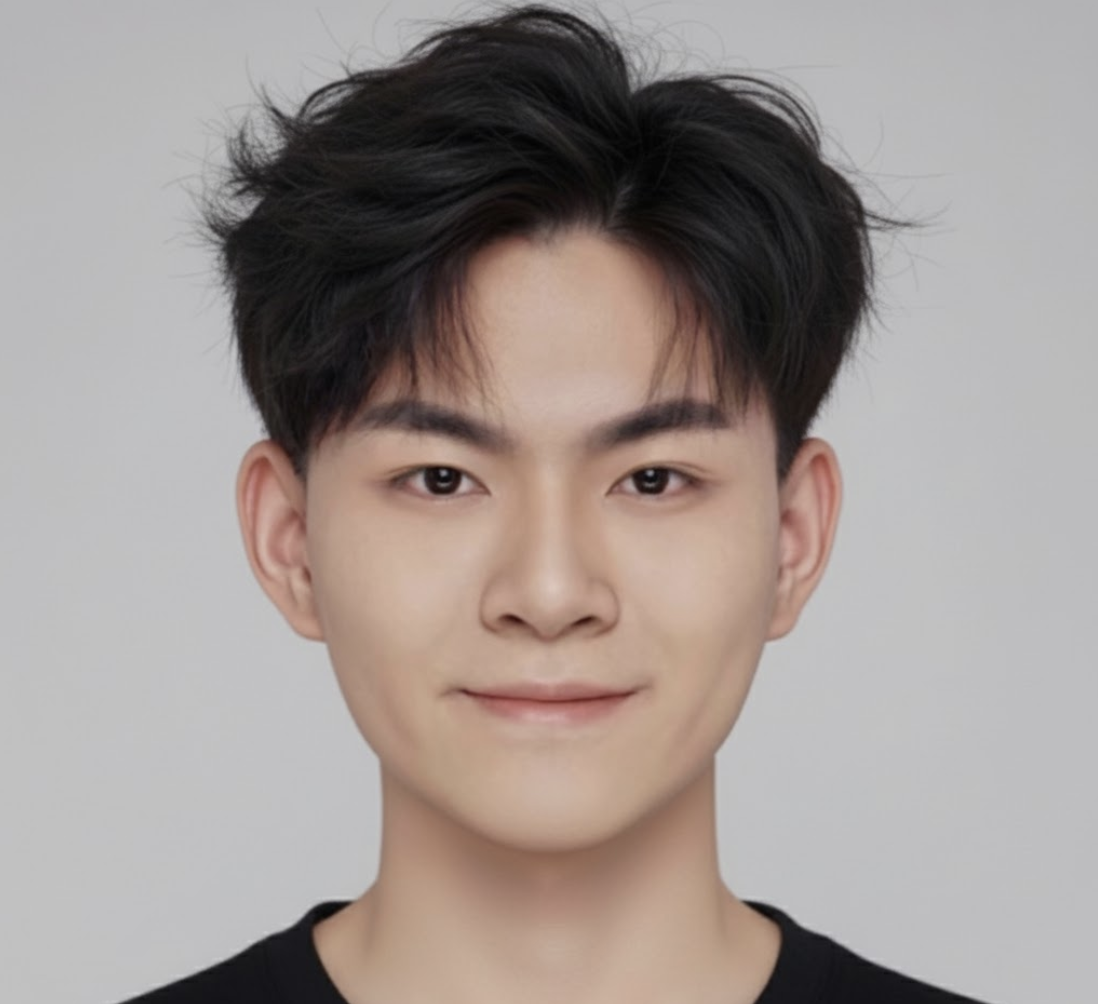

I am a fourth-year Engineering Science student at the University of Toronto, specializing in the Machine Intelligence option. My academic and research interests are 3D Computer Vision and Generative Models, with a focus on 3D Digital Humans and Image/Video Generation.

I am currently an undergraduate researcher working with Felix Taubner and Professor David Lindell on Human-Centric Compositional Video Generation. Previously, I was a research intern with the Creative Vision team at Snap Research, working on Image Generation and Personalization with Guocheng Gordon Qian, Kuan-Chieh Jackson Wang, and Sergey Tulyakov. I was also a UTEA-funded undergraduate researcher in the Modelics Lab with Professor Piero Triverio, working on 3D dynamic CT analysis.

Outside of academics, I lead the Research Department at UTMIST, the largest undergraduate ML community in Canada. I enjoy playing basketball and going to the gym, and I have played the piano and practiced Chinese calligraphy since elementary school.

Email / Google Scholar / GitHub / LinkedIn
